I have a lovely OKI MB480 printer. It's been reliable for 10 years, and I want to display its status in Home Assistant.

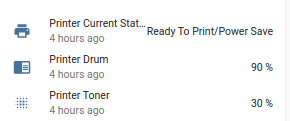

Like this...

The printer speaks SNMP and Home Assistant has an SNMP Sensor, so let's learn some SNMP and find a way to make the two talk to each other.

SNMP keeps its overhead low by transmitting very little, and what it does transmit is encoded as compact numeric identifiers. Here's an example:

$ snmpget -v 1 -c public 10.10.10.3 .1.3.6.1.4.1.2001.1.1.1.1.2.20.0
iso.3.6.1.4.1.2001.1.1.1.1.2.20.0 = STRING: "Ready To Print/Power Save"

Each of those numbers has meaning, so you need to know exactly what to ask for. Also, there is a client-server (manager-agent) arrangement to figure out (and install), three different versions of SNMP, and finally migrating a successful query into a Home Assistant format.
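To make "each of those numbers has meaning" concrete, here is a minimal Python sketch that labels the leading arcs of a numeric OID. The arc names in the table are the well-known ones at the top of the standard tree (which is why the tool prints `iso` in place of the leading `.1`). The helper names `parse_oid` and `label_prefix` are made up for illustration, and everything after the labelled prefix is vendor-specific, so it still needs a dictionary to interpret.

```python
# Well-known arcs at the top of the OID tree.
WELL_KNOWN = {
    (1,): "iso",
    (1, 3): "org",
    (1, 3, 6): "dod",
    (1, 3, 6, 1): "internet",
    (1, 3, 6, 1, 2): "mgmt",
    (1, 3, 6, 1, 2, 1): "mib-2",
    (1, 3, 6, 1, 4): "private",
    (1, 3, 6, 1, 4, 1): "enterprises",
}


def parse_oid(text):
    """Turn '.1.3.6.1' into the tuple (1, 3, 6, 1)."""
    return tuple(int(part) for part in text.strip().strip(".").split("."))


def label_prefix(oid):
    """Return (names of the well-known leading arcs, remaining numeric arcs)."""
    names = []
    for depth in range(1, len(oid) + 1):
        name = WELL_KNOWN.get(oid[:depth])
        if name is None:
            break
        names.append(name)
    return names, oid[len(names):]


names, rest = label_prefix(parse_oid(".1.3.6.1.4.1.2001.1.1.1.1.2.20.0"))
print(".".join(names))  # iso.org.dod.internet.private.enterprises
print(rest)             # (2001, 1, 1, 1, 1, 2, 20, 0)
```

The first remaining arc (2001 here) is the vendor's enterprise number; the arcs after it are defined by the vendor.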

How to ask SNMP a question

The printer has a built-in SNMP agent (server). Let's install an SNMP manager (client) on my laptop.

$ sudo apt install snmp

Now we can make two simple queries: walk (return a whole tree) and get (return one item). The tree may be quite lengthy -- on this printer, it's 1900 lines.

$ snmpwalk 10.10.10.3
snmpwalk: No securityName specified

Oops, we are missing two more elements:

- A version number. We're going to stick with version 1, the easiest.

- A community name. This is somewhat like a username; it defines access. Communities get replaced by real usernames and passwords in version 3. The most common community name is "public".

These are defined by the remote agent (server). For example, the printer supports v1 and v3, but not v2.

$ snmpget -v 1 -c public 10.10.10.3 .1.3.6.1.2.1.1.5.0
iso.3.6.1.2.1.1.5.0 = STRING: "OKI-MB480-224E59"

$ snmpwalk -v 1 -c public 10.10.10.3 > walkfile    # Use redirection for lengthy output
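If you want to script these queries, the sketch below wraps the same `snmpget` call in Python and splits a reply line into OID, type, and value. It assumes the `snmpget` binary from the `snmp` package is on the PATH and that replies look like the single-line ones shown in this post. The function names (`build_snmpget`, `parse_reply`, `snmp_get`) are hypothetical helpers, not part of any library.

```python
import re
import subprocess

# Matches reply lines such as: iso.3.6.1.2.1.1.5.0 = STRING: "OKI-MB480-224E59"
REPLY_RE = re.compile(r"^(?P<oid>\S+) = (?P<type>[\w-]+): (?P<value>.*)$")


def build_snmpget(host, oid, version="1", community="public"):
    """Return the argument list for the snmpget call used in this post."""
    return ["snmpget", "-v", version, "-c", community, host, oid]


def parse_reply(line):
    """Split one reply line into (oid, type, value); None if it does not match."""
    match = REPLY_RE.match(line.strip())
    if match is None:
        return None
    value = match.group("value")
    # String values may arrive wrapped in double quotes; drop them.
    if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
        value = value[1:-1]
    return match.group("oid"), match.group("type"), value


def snmp_get(host, oid, **options):
    """Run snmpget and parse its single-line reply (needs a reachable agent)."""
    result = subprocess.run(
        build_snmpget(host, oid, **options),
        capture_output=True, text=True, check=True, timeout=10,
    )
    return parse_reply(result.stdout)


print(parse_reply('iso.3.6.1.2.1.1.5.0 = STRING: "OKI-MB480-224E59"'))
```

With the printer reachable, `snmp_get("10.10.10.3", ".1.3.6.1.2.1.1.5.0")` would run the query shown above. Error messages such as "No securityName specified" do not match the pattern, so `parse_reply` returns `None` for them.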

Finding the right question to ask

Now that we have connectivity, we need a dictionary to understand all those number encodings. That dictionary is called a MIB file. It's a structured text file that defines all of the numbers and positions and response codes.

- The SNMP package that we installed has MIBs disabled by default. Enable them:

  - Edit the /etc/snmp/snmp.conf file.

  - Comment out the "mibs :" line.

- Install the package of standard MIB files.

$ sudo apt install snmp-mibs-downloader

The MIB for my printer wasn't in the package. I found it online, downloaded it, and stored it in /home/$ME/.snmp/mibs/. The snmp command automatically looks for MIBs there, too.

Here's the same query using the proper MIB as a dictionary:

$ snmpget -v 1 -c public -m OKIDATA-MIB 10.10.10.3 sysName.0
SNMPv2-MIB::sysName.0 = STRING: OKI-MB480-224E59

$ snmpget -v 1 -c public -m OKIDATA-MIB -O n 10.10.10.3 sysName.0    # '-O' formats output. 'n'=numeric
.1.3.6.1.2.1.1.5.0 = STRING: OKI-MB480-224E59

So now it's a matter of using snmpwalk to locate the fields that I want to ask for. I chose three fields:

- Current Status: OKIDATA-MIB::stLcdMessage.0

- Drum Usage: OKIDATA-MIB::usageDrumCurrentLevel.1

- Toner Percent Remaining: OKIDATA-MIB::usageTonerCurrentLevel.1
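One way to narrow down a 1900-line walk is to search the saved output for text you already know, such as the message on the printer's front panel. The sketch below is a small case-insensitive filter. The function name `find_in_walk` is hypothetical, and the sample lines are the two replies shown earlier in this post, standing in for a real walk file.

```python
def find_in_walk(lines, needle):
    """Return the walk lines that contain needle, ignoring case."""
    needle = needle.lower()
    return [line.rstrip("\n") for line in lines if needle in line.lower()]


# Stand-in for the contents of a saved walk file.
sample = [
    'iso.3.6.1.2.1.1.5.0 = STRING: "OKI-MB480-224E59"\n',
    'iso.3.6.1.4.1.2001.1.1.1.1.2.20.0 = STRING: "Ready To Print/Power Save"\n',
]

for hit in find_in_walk(sample, "ready"):
    print(hit)
```

To search a real walk, pass an open file instead of the sample list, for example `find_in_walk(open("walkfile"), "ready")`.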

Obtain the corresponding numeric code (called an OID) for each field using the -On flag, and test the OID without the MIB.

$ snmpget -v 1 -m OKIDATA-MIB -c public -O n 10.10.10.3 usageDrumCurrentLevel.1
.1.3.6.1.4.1.2001.1.1.1.1.100.4.1.1.3.1 = STRING: "2298"

$ snmpget -v 1 -c public 10.10.10.3 .1.3.6.1.4.1.2001.1.1.1.1.100.4.1.1.3.1
SNMPv2-SMI::enterprises.2001.1.1.1.1.100.4.1.1.3.1 = STRING: "2298"

Migrating a successful query into Home Assistant

Here's what the same SNMP query looks like in a Home Assistant config:

sensor:
  - platform: snmp
    version: 1            # Optional: Default is 1
    community: public     # Optional: Default is public
    host: 10.10.10.3
    baseoid: .1.3.6.1.4.1.2001.1.1.1.1.100.4.1.1.3.1
    name: Printer Drum Remaining
    unit_of_measurement: '%'
    # A drum lasts about 25,000 impressions. Convert usage to a percentage of 25,000
    value_template: '{{ (100 - ((value | int) / 250.00)) | int }}'
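The conversion is easy to sanity-check outside Home Assistant. Here is the same arithmetic as a small Python sketch, assuming the 25,000-impression drum life from the comment above and the raw value "2298" that the printer returned earlier. The function name `drum_percent_remaining` is illustrative only.

```python
DRUM_LIFE_IMPRESSIONS = 25_000  # Assumed drum life, from the config comment


def drum_percent_remaining(raw_value, drum_life=DRUM_LIFE_IMPRESSIONS):
    """Convert the raw usage count (sent as a string) into whole percent remaining."""
    used = int(raw_value)                    # SNMP returns the count as a STRING
    percent_used = used / (drum_life / 100)  # 25,000 impressions -> divide by 250
    return int(100 - percent_used)           # Truncate to a whole number


print(drum_percent_remaining("2298"))  # 90
```

A reading of 2298 impressions is about 9.2% of the drum's life, so the sensor should show 90.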